Keynotes

SALSA and PICANTE: Machine learning-based attacks on LWE with sparse binary secrets

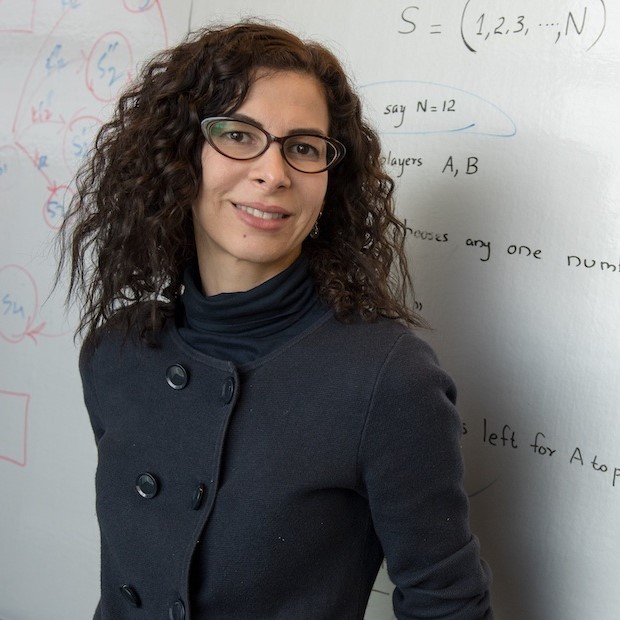

Kristin Lauter, Meta AI, USA

Learning with Errors (LWE) is a hard math problem underpinning many proposed post-quantum cryptographic (PQC) systems. The only PQC key exchange standardized by NIST is based on module LWE, and current publicly available PQ Homomorphic Encryption (HE) libraries are based on ring LWE. The security of LWE-based PQ cryptosystems is critical, but certain implementation choices could weaken them. One such choice is sparse binary secrets, desirable for PQ HE schemes for efficiency reasons.

This talk will discuss our efforts to develop machine learning-based attacks against LWE schemes with sparse binary secrets. Our initial work, SALSA, demonstrated a proof-of-concept machine learning-based attack on LWE with sparse binary secrets in small dimensions (n<129) and low Hamming weights (h<5). Our more recent work, PICANTE, recovers secrets in much larger dimensions (up to n=350) and with larger Hamming weights (roughly n/10, and up to h=60 for n=350). We achieve this dramatic improvement via a novel preprocessing step, which allows us to generate training data from a linear number of eavesdropped LWE samples (4n) and changes the distribution of the data to improve transformer training. We also improve the secret recovery methods of SALSA and introduce a novel cross-attention recovery mechanism that allows us to read off the secret directly from the trained models. While PICANTE does not threaten NIST's proposed LWE standards, it demonstrates significant improvement over SALSA and could scale further, highlighting the need for future investigation.
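For readers unfamiliar with the setting, the following minimal sketch illustrates what LWE samples with a sparse binary secret look like. It is not the SALSA or PICANTE code: the helper names, the modulus `q`, the error width `sigma`, and the toy dimension are all assumptions chosen for illustration, and only the sample count of 4n echoes the abstract.

```python
import random

def sparse_binary_secret(n, h, rng):
    """Return a length-n 0/1 vector with exactly h ones (Hamming weight h)."""
    secret = [0] * n
    for i in rng.sample(range(n), h):
        secret[i] = 1
    return secret

def lwe_sample(secret, q, sigma, rng):
    """Return one LWE sample (a, b) with b = <a, s> + e mod q."""
    a = [rng.randrange(q) for _ in secret]   # uniform vector in Z_q^n
    e = round(rng.gauss(0, sigma))           # small rounded-Gaussian error
    b = (sum(ai * si for ai, si in zip(a, secret)) + e) % q
    return a, b

def centered(x, q):
    """Map x mod q to its representative in the range centered on zero."""
    x %= q
    return x - q if x > q // 2 else x

# Toy, illustrative parameters only (assumed, not taken from the talk).
rng = random.Random(0)
n, h, q, sigma = 32, 3, 251, 3.0
secret = sparse_binary_secret(n, h, rng)
samples = [lwe_sample(secret, q, sigma, rng) for _ in range(4 * n)]
```

Given the true secret, the residual `centered(b - <a, s>, q)` of each sample is small; an attacker sees only the pairs `(a, b)` and must recover `secret` from them.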

Kristin Estella Lauter is an American mathematician and cryptographer whose research areas are number theory, algebraic geometry, cryptography, coding theory, and machine learning. She is particularly known for her work on Private AI and Homomorphic Encryption, for standardizing and deploying Elliptic Curve Cryptography, and for introducing Supersingular Isogeny Graphs as a foundational hard problem for Post Quantum Cryptography. She is currently the Director of Research Science for Meta AI Research (FAIR) North America, directing FAIR Labs in Seattle, Menlo Park, Pittsburgh, New York, and Montreal. She is also an Affiliate Professor at the University of Washington. She served as President of the Association for Women in Mathematics (AWM) from 2015-2017, and as the Polya Lecturer for the Mathematical Association of America (MAA) for 2018-2020.

Lauter received her BA, MS, and Ph.D. degrees in mathematics from the University of Chicago, in 1990, 1991, and 1996, respectively. She was a T.H. Hildebrandt Research Assistant Professor at the University of Michigan (1996-1999), a Visiting Scholar at Max Planck Institut fur Mathematik in Bonn, Germany (1997), and a Visiting Researcher at Institut de Mathematiques Luminy in France (1999). From 1999 to 2021, she was a researcher at Microsoft Research, leading the Cryptography and Privacy research group as Partner Research Manager from 2008 to 2021.

She is a co-founder of the Women in Numbers (WIN) network, a research collaboration community for women in number theory, and she was the lead PI for the AWM NSF Advance Grant (2015-2020) to create and sustain research networks for women in all areas of mathematics. She serves on the National Academies Committee on Applied and Theoretical Statistics (CATS), and on the Scientific Board for the Isaac Newton Institute in Cambridge, UK. She has served on the Advisory Board of the Banff International Research Station, the Council of the American Mathematical Society (2014-2017), and the Executive Committee of the Conference Board of Mathematical Sciences (CBMS).

In 2008 Lauter and her coauthors were awarded the Selfridge Prize in Computational Number Theory. She was elected to the 2015 Class of Fellows of the American Mathematical Society (AMS) "for contributions to arithmetic geometry and cryptography as well as service to the community." She was an Inaugural Fellow of the AWM (2017). In 2020, Lauter was elected as a Fellow of both the Society for Industrial and Applied Mathematics (SIAM) and the American Association for the Advancement of Science (AAAS). In 2021, she was elected as an Honorary member of the Real Sociedad Matemática Española (RSME).

Automated Cryptographically-Secure Private Computing: From Logic and Mixed-Protocol Optimization to Centralized and Federated ML Customization

Farinaz Koushanfar, University of California San Diego, USA

Over the last four decades, much research effort has been dedicated to designing cryptographically-secure methods for computing on encrypted data. However, despite the great progress in research, adoption of these sophisticated crypto methodologies has been rather slow and limited in practical settings. Presently used heuristic and trusted third-party solutions fall short of guaranteeing the privacy requirements of contemporary massive datasets, complex AI algorithms, and emerging collaborative/distributed computing scenarios such as blockchains.

In this talk, we outline the challenges in the state-of-the-art protocols for computing on encrypted data, with an emphasis on the emerging centralized, federated, and distributed learning scenarios. We discuss how, in recent years, giant strides have been made in this field by leveraging optimization and design automation methods including logic synthesis, protocol selection, and automated co-design/co-optimization of cryptographic protocols, learning algorithms, software, and hardware. A proof of concept will be demonstrated in the design of the present state-of-the-art frameworks for cryptographically-secure deep learning on encrypted data. We conclude by discussing the practical challenges in the emerging private robust learning and distributed/federated computing scenarios as well as the opportunities ahead.

Farinaz Koushanfar is the Henry Booker Scholar Professor of ECE at the University of California San Diego (UCSD), where she is also the founding co-director of the UCSD Center for Machine-Intelligence, Computing & Security (MICS). Her research addresses several aspects of secure and efficient computing, with a focus on hardware and system security, robust machine learning under resource constraints, intellectual property (IP) protection, as well as practical privacy-preserving computing. Dr. Koushanfar is a fellow of the Kavli Frontiers of the National Academy of Sciences and a fellow of IEEE/ACM. She has received a number of awards and honors including the Presidential Early Career Award for Scientists and Engineers (PECASE) from President Obama, the ACM SIGDA Outstanding New Faculty Award, Cisco IoT Security Grand Challenge Award, MIT Technology Review TR-35, Qualcomm Innovation Awards, Intel Collaborative Awards, Young Faculty/CAREER Awards from NSF, DARPA, ONR and ARO, as well as several best paper awards.

Don’t ChatGPT Me!: Towards Unmasking the Wordsmith

Ahmad-Reza Sadeghi, TU Darmstadt, Germany

The hype surrounding Large Language Models (LLMs) has captivated countless individuals, fostering the belief that these models possess an almost magical ability to solve diverse problems. While LLMs, such as ChatGPT, offer numerous benefits, they also raise significant concerns regarding misinformation and plagiarism. Consequently, identifying AI-generated content has become an appealing area of research. However, current text detection methods face limitations in accurately discerning ChatGPT content. Indeed, our assessment of the efficacy of existing language detectors in distinguishing ChatGPT-generated texts reveals that none of the evaluated detectors consistently achieves high detection rates, as the highest accuracy achieved was 47%.

In this talk, we present our research work to develop a robust ChatGPT detector, which aims to capture the distinctive biases in text composition present in human-written and AI-generated content, as well as human adaptations intended to elude detection. Drawing inspiration from the multifaceted nature of human communication, which contrasts starkly with the standardized interaction patterns of machines, we employ various techniques, including physical phenomena such as the Doppler effect, to address these challenges. To evaluate our detector, we use a benchmark dataset encompassing mixed prompts from ChatGPT and humans, spanning diverse domains. Lastly, we discuss open problems that are currently engaging our attention.

Ahmad-Reza Sadeghi is a professor of Computer Science and the head of the System Security Lab at Technical University of Darmstadt, Germany. He has been leading several Collaborative Research Labs with Intel since 2012, and with Huawei since 2019.

He studied both Mechanical and Electrical Engineering and holds a Ph.D. in Computer Science from the University of Saarland, Germany. Prior to academia, he worked in R&D at IT enterprises, including Ericsson Telecommunications. He has contributed continuously to the security and privacy research field. He was Editor-In-Chief of IEEE Security and Privacy Magazine, and has been serving on a variety of editorial boards such as ACM TODAES, ACM TIOT, and ACM DTRAP.

For his influential research on Trusted and Trustworthy Computing he received the renowned German “Karl Heinz Beckurts” award. This award honors excellent scientific achievements with high impact on industrial innovations in Germany. In 2018, he received the ACM SIGSAC Outstanding Contributions Award for dedicated research, education, and management leadership in the security community and for pioneering contributions in content protection, mobile security, and hardware-assisted security. In 2021, he was honored with the Intel Academic Leadership Award at the USENIX Security conference for his influential research on cybersecurity and in particular on hardware-assisted security.